When Sam Altman is in the feature announcement video, expect some big news 👀

When OpenAI releases a new feature on their YouTube channel, you can expect either a “meh” announcement or something that significantly moves the needle. When Sam Altman appears in the announcement video (as he did in the 4o announcement), you can be sure it’s going to be something significant.

The unveiling of GPT‑4o’s native image generation, in my humble opinion, moves the needle significantly. Why? The 4o image generation update fixes many of the frustrations of creating AI images, and it also has the potential to transform AI image generation from a frustrating gimmick into a powerful source of realistic and useful imagery.

For content creators, marketers, and artists, 4o image generation means you can brainstorm ideas with an AI and instantly visualize them – all in one conversation. Whether this leaves you incredibly excited or absolutely terrified as a creative (graphic designers especially), there is still plenty of room for creatives to lead the workflow and amplify their skills with 4o image gen.

This article will dive into the new features of GPT‑4o’s image generation, showcase examples from OpenAI’s demos, compare it with previous versions and other popular tools (Midjourney, DALL·E 3, Adobe Firefly), and examine its strengths, limitations, and potential in creative workflows.

Let’s explore what makes this release exciting (and where it still falls short) from a creative perspective.

Key New Features of GPT‑4o Image Generation

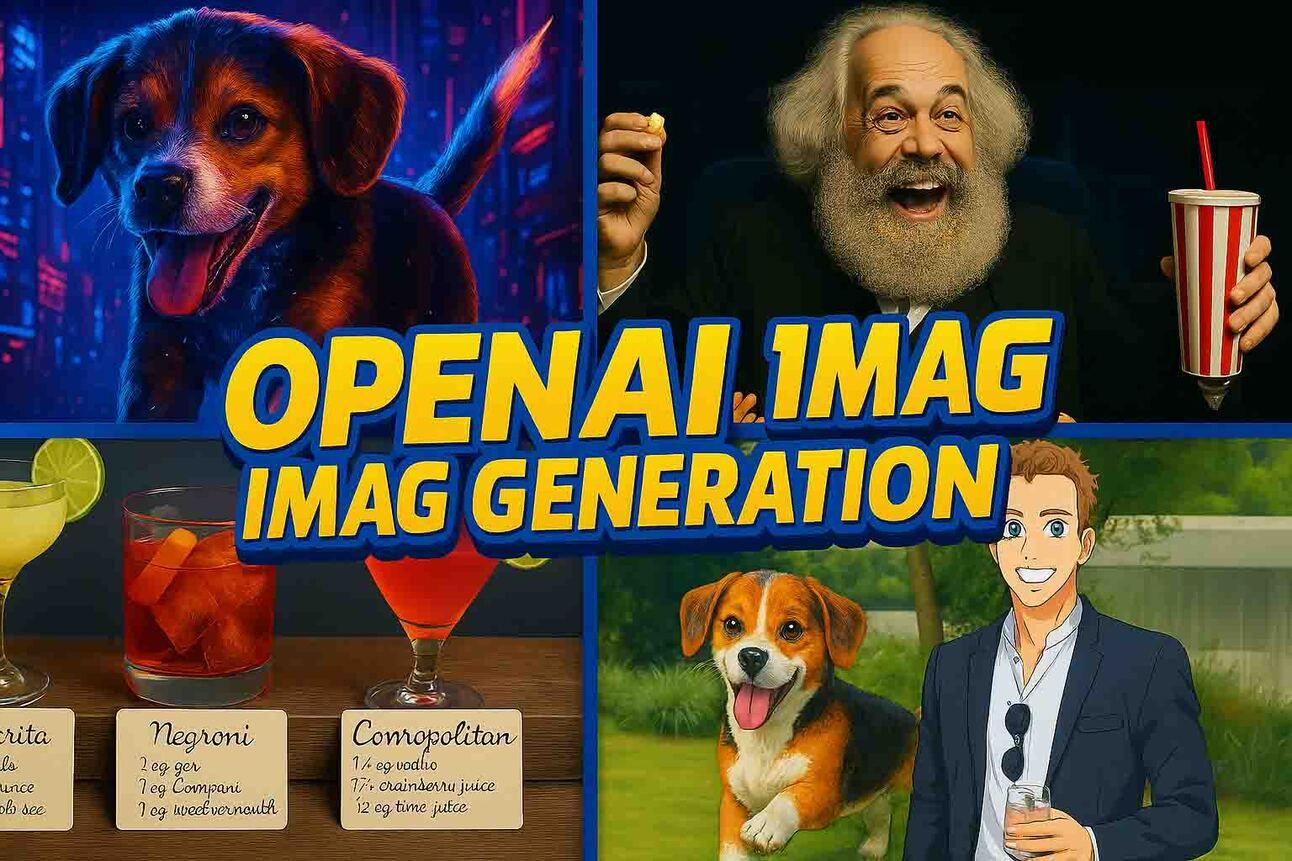

OpenAI went to town on their YouTube channel showcasing the new 4o image gen features

OpenAI’s 4o image generation update isn’t just a minor tweak – it’s a leap toward making AI images more useful and controllable. Here are the headline features at a glance:

Natively Multimodal – Image generation is built into the GPT‑4o model, allowing it to use its language understanding and context memory when creating pictures. You can have a back-and-forth chat refining an image, and GPT‑4o remembers the details. It also accepts image inputs: you can upload an image as part of your prompt, either to transform it or to use it as inspiration.

Precise Prompt Adherence – The model excels at following complex, detailed instructions in prompts. If you ask for a specific layout, style, or content in the image, GPT‑4o is more likely to deliver it faithfully (e.g. it can generate a multi-panel comic or a diagram with labeled parts exactly as described).

Accurate Text Rendering in Images – Unlike earlier AI image generators that garbled written text, GPT‑4o can produce readable, well-placed text within images. Signs, labels, posters, even menus or invitations with stylized fonts come out with correct wording – a game-changer for creating things like ads or informational graphics.

Photorealism and Style Range – The new model was trained on a vast array of images, so it can output everything from near-photographic scenes to artistic illustrations. It can mimic different art styles or lighting with impressive realism. It’s also adept at blending text and imagery in creative ways, turning image generation into true visual communication rather than just art for art’s sake.

Advanced Image Specifications – GPT‑4o’s image generator lets you specify technical details. You can set aspect ratios, request transparent backgrounds, or even ask for exact color codes in the image. For example, you might say “make the background transparent” or “use #FF5733 as the accent color,” and the model will attempt to comply.

Those are the big improvements on paper – but how do they translate into practice? Let’s look at some examples and use cases to see these features in action.

New 4o Image Generation Features: From Imagination to Image

OpenAI’s showcase demos and videos highlight how GPT‑4o’s image generation can serve up useful visuals, not just pretty pictures. Below, we walk through a few scenarios that were demonstrated, illustrating why this update matters for creative work.

Detailed Directions

FINALLY get the exact image you have in your mind with Detailed Directions

Detailed Directions → Exactly the Image You Envision: One of the demos (“Detailed Directions”) shows how you can describe a complex scene or graphic and get a spot-on result.

For instance, I tried a simple prompt: “professionally shot infographic of cocktails, listing each drink’s recipe on a handwritten label card.” GPT‑4o delivered an image of three cocktails, each fronted by a neat brown recipe card written in beautiful handwritten text.

I’m impressed (and a little thirsty)

Text Rendering (Finally)

The moment we’ve all been waiting for...

Text in the Image (Finally) Makes Sense: In previous AI image tools, asking for text (a sign, a quote, a product label) was a gamble; you’d often get lorem ipsum-looking gibberish. GPT‑4o changes that. One demo prompt asked for a street pole covered in realistic parking signs with funny custom messages, adding “make sure all the text is rendered correctly.” The result had legible, witty street signs (“Broom Parking for Witches Not Permitted”, etc.) exactly as requested.

Upload and Restyle

Use your own sketches, photography, or other images as the starting point for a restyled image.

Use Your Own Image as a Starting Point: Another powerful feature is how GPT‑4o lets you upload an image and then ask the AI to change or riff on it. OpenAI demonstrated this by taking a user’s photo and transforming it in different ways.

In the OpenAI live demo, one of the team members snapped a selfie and asked ChatGPT to turn it into an anime-style portrait.

Turn photos into stylized images (and create your own anime comic book?)

I tried the anime prompt on a photo of myself: pretty dashing in anime, if I do say so myself.

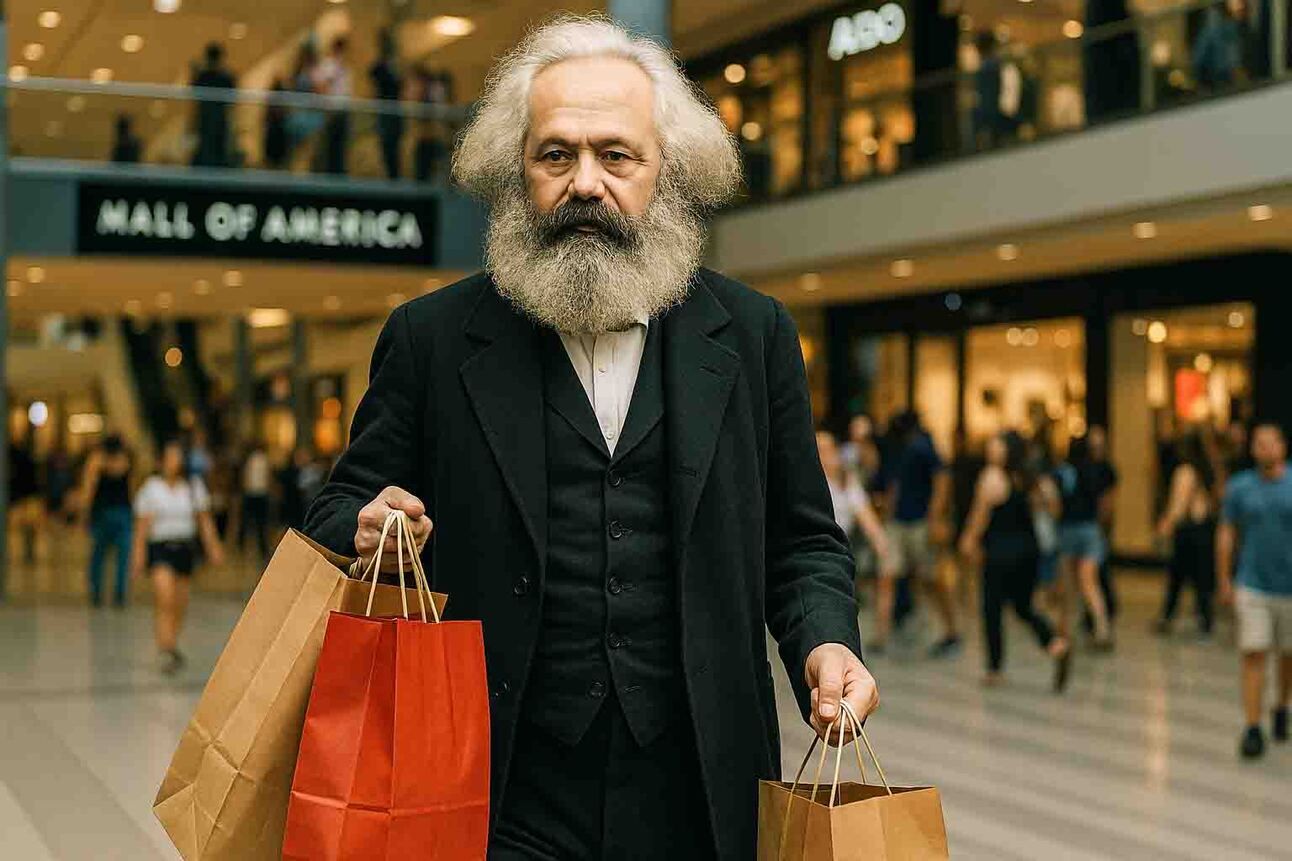

Photorealism

Photorealism with a Dash of Knowledge: The new model can produce highly photorealistic images when asked – even whimsical ones. In one quirky example, the prompt asked for “a candid paparazzi-style photo of a 19th-century bearded philosopher on a shopping spree at the Mall of America.”

The photorealism of the new OpenAI 4o model is pretty wild (check out that background bokeh)

Character Consistency (Also Finally)

Character consistency will allow you to create a consistent story or set of images

Consistent Characters and Iterative Design: Because GPT‑4o’s image gen is integrated with the chat model, it can maintain context about what it just generated. This was a shortcoming of earlier systems – if you wanted the same character in a second image, you had to describe them all over again and hope for the best. Now, GPT‑4o can keep track.

OpenAI notes that if you’re designing a video game character, you can generate an initial concept art, then say “make them taller and put them in a new pose,” and the next image will respect those changes while keeping the character’s core appearance consistent.

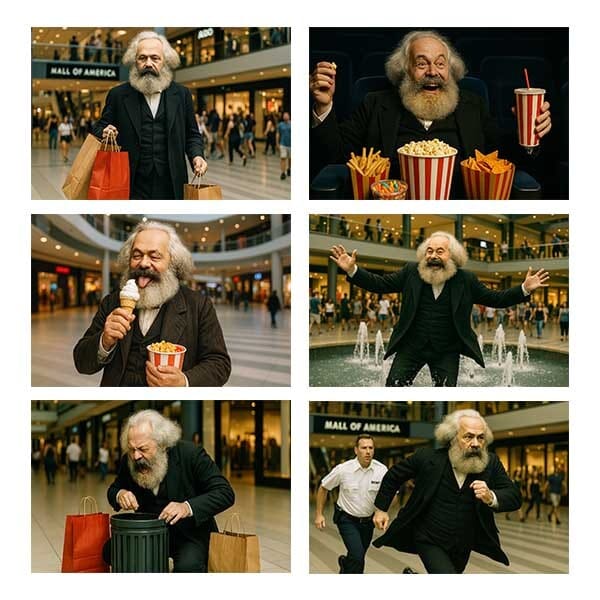

I used the consistent character feature to create a pretty cool storyboard for our philosopher above:

4o has the ability to create consistent characters - a game-changer for storytelling.

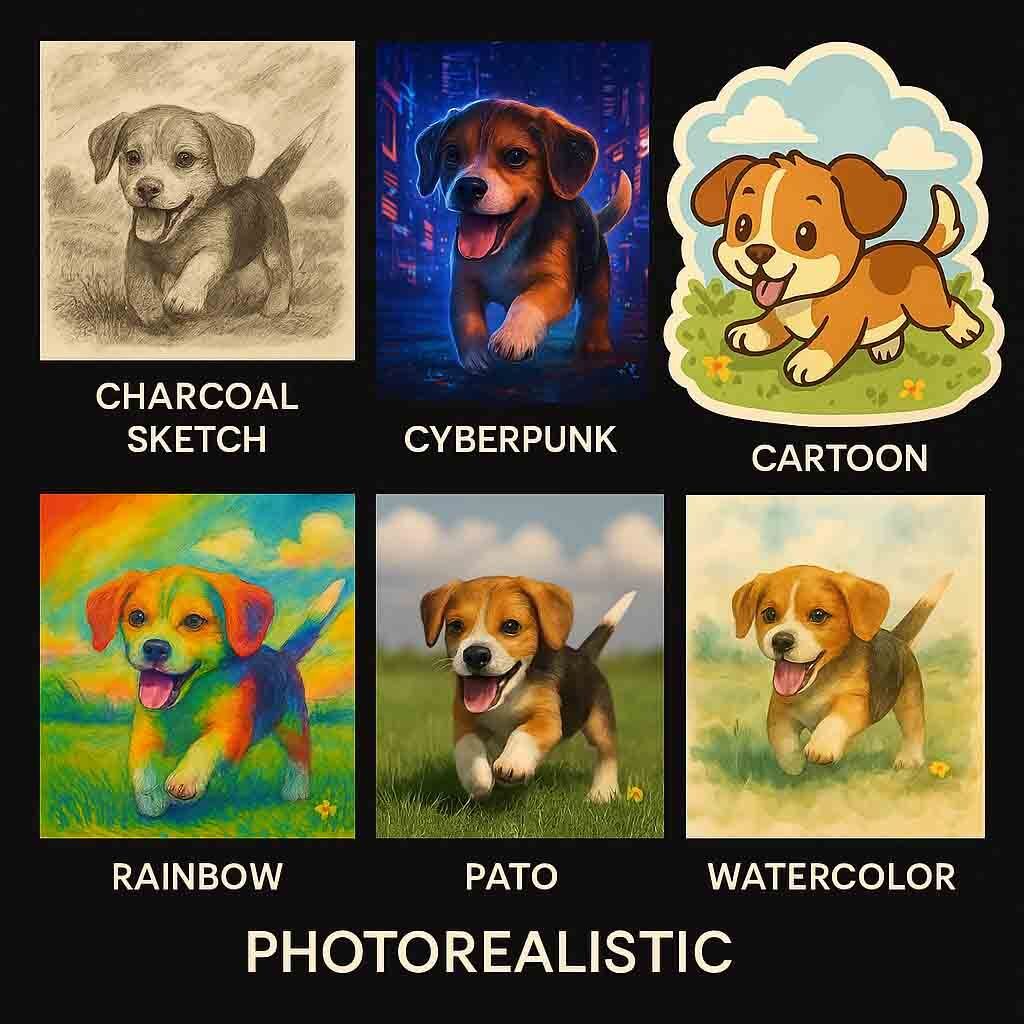

Multiple Styles

Multiple Styles, One Prompt: GPT‑4o can also mix and match styles within a single prompt. In a showcase titled “Transparent Layers,” the AI was asked to create individual sticker-style graphics with transparent backgrounds. In my own test, it generated a puppy playing in a field in whatever style I requested.

It can similarly output icons, logos, or other assets ready to be composited, which is a boon for graphic designers.

I created all of these styles individually, then asked 4o to combine them with labels. Not bad, though I have no idea what “pato” means.

Of course, no AI tool is perfect. Next, let’s weigh where GPT‑4o shines against the limitations you should keep in mind.

Strengths and Limitations of the 4o Update

No tool is without its flaws, and GPT‑4o image generation is no exception. Let’s evaluate its strengths and then candidly examine the limitations that remain (some of which OpenAI has themselves acknowledged).

Major Strengths:

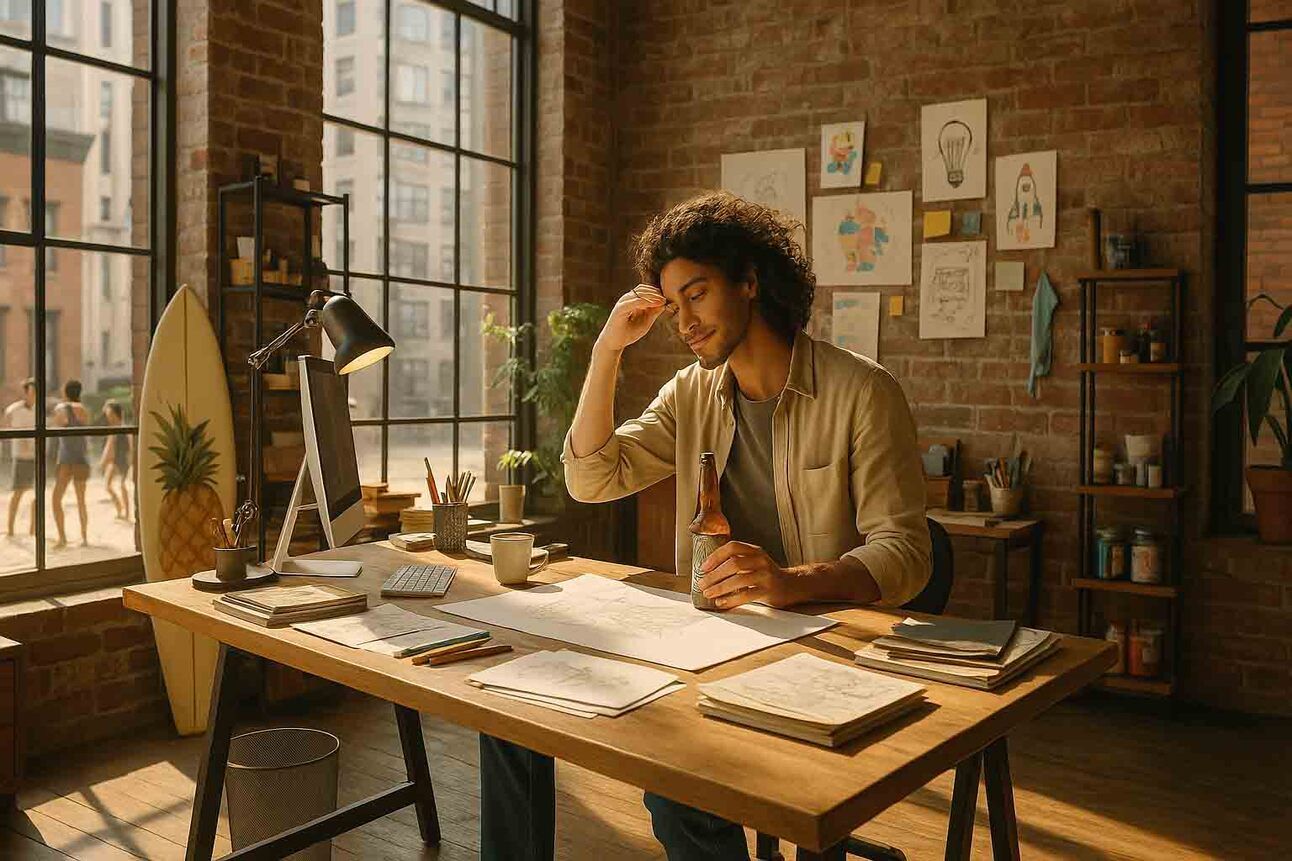

Seamless Creative Workflow: Perhaps the biggest win is how easy it is to use in a creative workflow. You can be chatting with GPT‑4o about campaign ideas, and then simply say “Actually, sketch out this idea for me as an image.” The model will produce an image without you ever leaving the chat. This tight integration means less context-switching – as a creator you don’t have to jump between a chat, an image tool, and Photoshop; ChatGPT becomes a one-stop creative assistant.

Precise and “Useful” Outputs: GPT‑4o’s focus on useful imagery (diagrams, infographics, etc.) means it often produces immediately usable results. In many of the demos, the images looked like they could slot straight into a presentation or social media post without much editing. The fact that you can get things like a fully designed menu or a multi-panel comic with correct text is a huge time-saver for creatives who need visuals that say something.

Leveraging GPT‑4o’s Knowledge: Since the image generator is part of GPT‑4o, it has the entire knowledge base of the language model to draw upon. This is a subtler strength but an important one. It means the AI understands context and concepts while drawing. For example, it knows who Isaac Newton is and what a prism experiment looks like, so when you ask for Newton demonstrating a prism, it can accurately render the setup.

Enhanced Creative Control: For artists and designers who want control, GPT‑4o offers new levers. As mentioned above, you can specify exact colors and transparent backgrounds. Imagine asking an AI to create a logo, saying “use our brand blue #1A9FF2 as the background,” and having it do just that. That level of control previously required manual editing after generation.

Speed of Ideation: While generation is somewhat slow per image, the overall speed from idea to visual is still remarkable. For a content creator, being able to generate a visual mockup in one minute is transformative. Need three different concept sketches for a client? You can get them in about three minutes. This speeds up the ideation phase of creative work. You might still spend hours polishing the final version, but you’ve skipped hours of initial drafting or hunting for reference images.

I tried the very detailed prompt below, and after a little back-and-forth, 4o created a pretty incredible image:

A stunning woman with sun-kissed skin and windswept, wavy brunette hair stands confidently on a pristine tropical beach during golden hour. She wears a vibrant, high-fashion bikini with a modern twist—shimmering teal with gold accents—and a sheer, flowing beach kimono that flutters in the breeze. In one hand, she holds a sleek, cold bottle of craft beer with condensation beading down the glass, labeled in an elegant art-deco style logo. Her other hand shades her eyes as she gazes toward the horizon with a charismatic, inviting smile. Behind her, a group of friends plays beach volleyball in soft focus, while a branded surfboard with the beer logo leans against a palm tree. In the sky, a small banner plane trails a vibrant ribbon advertising the beer with the tagline “Taste the Sunset.” A playful golden retriever runs through the waves, and a sand sculpture of the beer bottle with tropical fruit garnish sits near her feet. Lens flares and warm sunlight dance across the scene, giving it a cinematic, magazine-cover-quality glow.

Aside from realism, the 4o model does a great job of including accurate text and multiple subject-matter elements.

Current Limitations:

Despite all the strengths, GPT‑4o’s image generation has plenty of areas to improve. OpenAI themselves listed known issues, and hands-on experience validates many of them:

Slow Generation Time: As mentioned, each image can take up to a minute to render. If you’re used to DALL·E 3 spitting out four options in 20 seconds or Midjourney doing nearly real-time updates, this feels sluggish. For now, GPT‑4o only produces one image per request (often the “best of N” internally) rather than a grid of variants. So the iterative loop might be slower – one image, ask for change, wait, etc. OpenAI will likely optimize this, but it’s a factor to consider. In a fast-paced workflow, you might still use quicker models for rough drafts and reserve GPT‑4o for when you need that precision.

Image Cropping and Layout Quirks: One odd issue observed is that GPT‑4o sometimes crops images too tightly, especially for tall formats like posters. For example, it might cut off the bottom of a flyer or frame a scene awkwardly, requiring a redo. This suggests the model isn’t always “aware” of the canvas boundaries perfectly. Additionally, while it follows layout instructions well, it can occasionally misjudge spacing (perhaps jamming elements together).

Hallucinations and Mistakes: Yes, image models can hallucinate too! GPT‑4o can sometimes make up details that weren’t in the prompt, especially if the prompt was short or vague. For instance, a user might ask for a simple “woman riding a horse on a beach” and the AI might add an extra horse in the background or put a lighthouse on the shore that wasn’t mentioned – just because it “associates” beaches with lighthouses. These hallucinations are usually minor, but they can be problematic if you needed a very specific output.

“High Binding” Limit – Crowded Scenes: While GPT‑4o handles multiple elements better than before, there is a limit. OpenAI found that if you ask for a scene with more than ~10–20 distinct items or characters, the model may start struggling to bind all the concepts correctly. For example, generating an image of the entire periodic table (118 elements) with correct symbols is beyond it; it will likely make mistakes or repeat elements. Likewise, a “Where’s Waldo” style crowd scene with dozens of unique characters might not render each one exactly as described.

Multilingual and Non-Latin Text: A notable limitation is that GPT‑4o is weak at rendering non-English text in images. It often flubs non-Latin scripts (like Chinese, Arabic, Hindi, etc.), producing characters that are incorrect or gibberish. Even certain accented characters in Latin alphabets might get distorted. So if you need, say, a bilingual poster in English and Japanese, you might get a good English portion but nonsense Japanese. This is an area where OpenAI still needs to improve the model. For now, creatives working in languages other than English should be cautious: you may have to fix those parts manually or use a localized model if one is available.

Image Editing Precision: While you can request edits in the prompt, the model doesn’t always surgically edit only that part. OpenAI notes that asking to fix a specific portion (like “correct the spelling of a sign in the image”) can have side effects – it might alter other parts of the image unintentionally. For example, telling it to “change the car from red to blue” might also unexpectedly change the background for whatever internal reason. There’s also a known bug with face edits: if you upload a photo with a face and ask for slight changes across iterations, it might have trouble keeping the face the same between edits (OpenAI said they’re fixing a consistency bug there).

Small Text and Dense Information: If you try to cram a lot of tiny text into an image (e.g. a full page of a document or a very detailed chart with minuscule labels), the model will probably falter. It handles reasonably sized text well, but it’s not going to generate a legible credit card statement or a complex graph with dozens of tiny data labels. The resolution and model’s focus just aren’t there yet for very small text.

Content Restrictions and Bias: Creatives may bump into the guardrails OpenAI has set. The model is conservative about certain content – for example, it won’t generate real people’s faces (so no asking for a photorealistic “Tom Cruise doing X”, it will refuse), and it avoids overtly sexual or violent imagery as per policy. Satire and “edgy” art might be toned down. Sam Altman mentioned they tuned it to allow a bit more humor or mild offensiveness “within reason” – meaning it might allow some leeway for artistic expression (perhaps it won’t insta-block every politically incorrect joke image), but there’s still a firm line of appropriateness.

I asked 4o to create a YouTube thumbnail using the images I’d created - not great (and what’s going on with that spelling??)

Reality Check: It’s worth noting that many of these limitations are openly stated by OpenAI – they are aware and actively working on them. This transparency is good: it sets expectations for creators not to treat the AI as infallible. In practice, a savvy user can work around some issues (reframe the prompt, do minor manual edits after generation, etc.).

Some limitations will matter more to certain users than others. For example, if you never create multilingual content, you won’t care about that flaw. But if you often design posters in Japanese, it’s a showstopper for now.

On the flip side, GPT‑4o’s current strengths do outshine its weaknesses for many use cases. The limitations are mostly technical quirks, whereas the strengths unlock fundamentally new capabilities. As a creative, you quickly learn what it’s great at (and lean into those uses), and what to avoid or handle yourself.

Implications for Creative Workflows

You can finally use detailed prompts in 4o to generate incredibly accurate images.

So, how might these new features change the day-to-day for content creators, marketers, or artists? The impact of GPT‑4o image generation can be both exciting and disruptive:

Rapid Prototyping and Ideation: Brainstorming visuals used to require either an artist to sketch ideas or searching for reference images. Now, you can prototype visually in minutes. A marketing team could be chatting in a meeting and live-generate concept images via ChatGPT to illustrate each idea pitched. This makes the ideation phase much more dynamic. For instance, a content creator planning a YouTube video thumbnail can try out several thumbnail concepts (different layouts, colors, text) by just describing them to GPT‑4o and getting mockups. It lowers the barrier to visual thinking – even those who “can’t draw” can explore designs and compositions with the AI’s help.

Augmenting (Not Replacing) Creative Professionals: It’s important to note that while GPT‑4o can generate impressive visuals, it doesn’t replace the need for human creativity and oversight. Think of it as a very smart design assistant. A graphic designer might use it to generate base imagery or to overcome blank-canvas syndrome, then import the result into Illustrator or Photoshop to refine typography, adjust colors to exact brand standards, or tweak composition. In short, it can save time on the labor-intensive parts of creation, allowing the humans to focus more on the high-level creative decisions and fine details.

New Forms of Content: Having text and image generation in one place enables new types of content creation that blend modalities. For example, one could envision interactive storytelling where you and the AI co-create a story, and at key plot points the AI also generates an illustration of the scene. A content creator could literally “direct” a short comic or children’s book with the AI as both writer and illustrator alongside them. The integration opens up workflows where visual content is generated in context with written or spoken content, making the AI a versatile creative partner.

Challenges in Adopting the Tool: Creatives will also navigate some challenges adopting GPT‑4o. One is the learning curve of promptcraft – getting the best out of the model requires a new kind of skill: knowing how to describe visuals in text clearly and effectively. This blurs the line between copywriting and art direction. Some artists may feel frustrated initially if the image isn’t what they imagined, leading them to refine their prompting technique. On the flip side, non-artists might not know the art terminology to direct the AI (“what style am I looking for?”). OpenAI’s chat approach helps here because you can iteratively clarify (“the vibe is not right, maybe make it more minimalistic and use pastel colors”).

Ethical and Quality Considerations: Another part of the workflow is validating and editing what the AI gives you. Creatives will need to double-check facts in any generated infographic (the AI might present a plausible chart that is actually inaccurate if it hallucinated data). For commercial work, it’s also worth checking that the output doesn’t inadvertently copy an existing image too closely (for example, a lookalike of a famous painting). The inclusion of C2PA metadata in each image is a positive move for transparency: if you publish AI-generated visuals, they carry a flag that they were AI-made (in case someone down the line needs to verify authenticity).

Empowering Indie Creators and Small Teams: One very positive impact is the empowerment of those with fewer resources. A solo entrepreneur can now generate pretty decent marketing visuals without hiring a graphic designer for each small task. An indie game developer can produce concept art for their characters and worlds without a full art department, and use those concepts to attract funding or guide contractors later. A blogger can spice up articles with custom illustrations tailored to their exact content, instead of settling for generic stock photos.

Final Thoughts: A New Chapter for Creative AI

Not sure what he’s doing with his hand, but there’s no arguing the realism of OpenAI’s 4o model is impressive.

OpenAI’s GPT‑4o image generation release marks an impactful step in the evolution of creative technology. It brings us closer to an era where visuals are as easy to summon and shape as text, which for many of us is a mind-boggling development.

For creatives, the immediate impact is a toolkit that can accelerate production, unlock new workflows, and perhaps inspire new creative directions (after all, seeing an AI unexpectedly interpret your idea can spark ideas you hadn’t thought of). It lowers some barriers – you don’t need years of art training to compose a decent-looking image for your project – while raising new questions about the role of human artistry and the nature of visual truth.

This release is both exciting and a little unsettling. Exciting because it empowers creativity in fresh ways; unsettling because it will force us to confront how we value human-made art, how we ensure originality and avoid a deluge of AI-generated sameness, and how we adapt our skills.

The update is explorative in that it opens many paths – from serious design to playful experimentation – and it invites creatives to be, in a sense, co-pilots with AI. It’s also important to stay critical: hype aside, the tool has limits and will produce clunky results or require manual fix-ups at times. Understanding those nuances will be key to using it effectively.

Ultimately, what makes this release impactful is that image generation is no longer a siloed novelty – it’s now a native capability of a general AI assistant. This means millions more people will be exposed to AI image creation (as it rolls out to even free ChatGPT users), and it will become commonplace to “ask ChatGPT to draw X”.

We’ll likely see an explosion of AI-created visuals across the web. For the creative community, embracing this technology thoughtfully – as a partner, a tool, and yes, sometimes a competitor – will be the challenge and opportunity of our time.

In a field that’s all about breaking boundaries between imagination and reality, GPT‑4o just pushed the boundary a little further. It’s up to us as creators to push it in the right direction.